the secret list of websites Chris Coyier

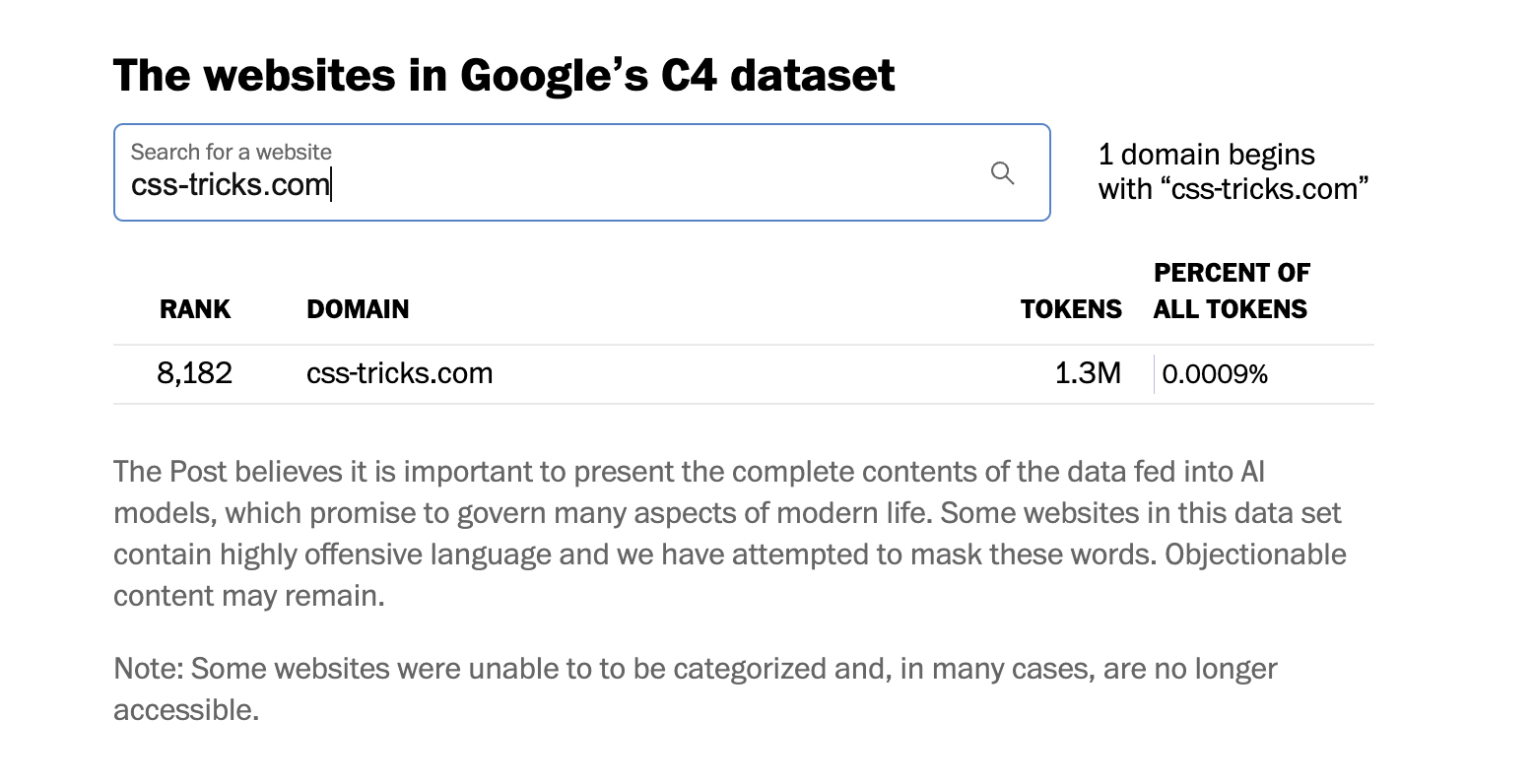

The Washington Post does research to figure out which websites were used to train Google’s AI model:

To look inside this black box, we analyzed Google’s C4 data set, a massive snapshot of the contents of 15 million websites that have been used to instruct some high-profile English-language AIs, called large language models, including Google’s T5 and Facebook’s LLaMA.

Inside the secret list of websites that make AI like ChatGPT sound smart

My largest corpus of writing to date is on the web at css-tricks.com (along with many other writers), so naturally, I’m interested in seeing if it was used. (Plus, I’ve been seeing people post their rank as a weird nerd flex, so I’m following suit.)

Despite Google employees’ serious misgivings (just little stuff like the information presented leading to “serious injury or death”), Google has publicly launched its Bard tool and is very serious about investing in AI.

Me, I just think it’s fuckin’ rude.

Google is a portal to the web. Google is an amazing tool for finding relevant websites to go to. That was useful when it was made, and it’s done nothing but grow in usefulness. Google should be encouraging and fighting for the open web. But now they’re like, actually we’re just going to suck up your website, put it in a blender with all other websites, and spit out word smoothies for people instead of sending them to your website. Instead.

And while doing that, they aren’t:

- Telling authors their content is being used to train

- Telling users where the output came from

- Offering any meter of how reliable or confidently correct the output is

So, I’m critical. It’s irresponsible.

But I’m not a neo-Luddite or whatever on this. It’s all certainly interesting. I like that these tools are almost immediately useful and overflowing with use cases. Heck, I needed a quick CSS rainbow gradient the other day, and the output from Bard was quick and useful. I’m a paying GitHub Copilot customer and I’m 100% sure it makes me a faster and better coder. I’m nervous about lots of things related to (massive air quotes) “AI” but I’m hopeful it can do some good.

On being critical though, here’s Manuel Moreale:

… I do enjoy reading news and discussions when politics and technology are both involved. I especially enjoy reading people’s perspectives on these topics. One thing I’m noticing more and more though, is that most people are quick to point out what’s wrong about something, but almost never offer solutions or alternatives.

And that is because complaining or pointing fingers is the easy part. Figuring out alternatives is hard.

Criticising is the easy part

So here’s what I’d like to see done:

- Stop firing ethics people. What is it, three times now?

- Be very open about what content a model is trained on, and at least allow people to opt-out. Better — opt in.

- Credit and link to the sources directly in the output where possible.

- Operate this part of the business as profit neutral.
